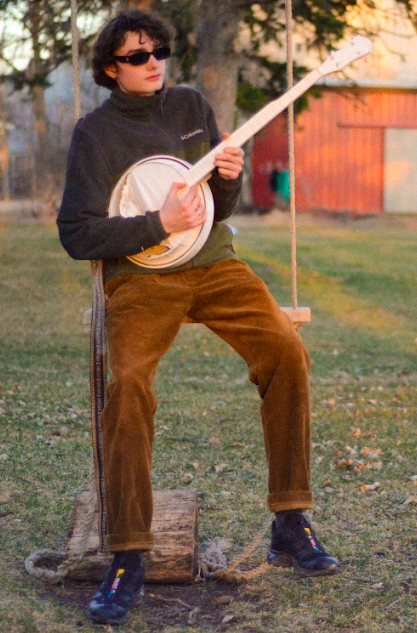

On Feb. 6, the Philosophy Department hosted a talk from a candidate for its postdoctoral position titled “What Do Large Language Models Understand? And What Do We Understand About LLMs?” The talk was given by Jessie Hall, a postdoctoral fellow at the Institute of the History and Philosophy of Science and Technology at the University of Toronto. Over the course of an hour, Hall led the audience through past philosophical arguments on computer intelligence, where current AI falls in those discussions, and what it means for LLMs to truly understand. The talk was followed by a Q&A session with the audience.

“How do we know we have a true thinking machine?” asked Hall. She described how one litmus test has been the Turing Test, in which a human interrogator holds text conversations with another human and a machine, with the goal of identifying which is which. If the interrogator cannot tell the difference, the machine passes the test, proving it exhibits signs of human intelligence. Modern LLMs commonly pass the Turing Test, but people are reluctant to call such behavior intelligence. The same phenomenon occurred with the Winograd Schema Challenge, another test of machine intelligence.

“Why is it not enough for us?” Hall asked. She then led the audience through the Chinese Room Experiment, a thought experiment by John Searle that argues a computer executing a program does not have intelligence. In this experiment, Searle asks you to imagine yourself as a monolingual English speaker locked in a room. A batch of Chinese writing is slipped under the door. Inside the room, you have instructions about which Chinese characters should correspond to the characters given, which you then write on a piece of paper and slip back out the door. To somebody outside the room, it seems that you understand Chinese perfectly. As Hall explained, applying this logic to LLMs means that large language models may imitate conversation, but they do not understand it.

Still, in the true manner of a philosophy talk, Hall challenged this conclusion. She asked the audience to consider whether computer intelligence could only come from a machine that perfectly replicated a brain, and how brain-like a machine’s architecture would need to be before we considered it to truly understand.

“I thought it was very thought-provoking,” said Cindy Zhao ’27. “Does behavior of understanding indicate understanding, and is there a distinction between showing that you understand and understanding? I think these are questions that are still relevant today.”

The philosophy department is in the process of hiring a postdoctoral candidate specializing in the philosophy of computing and AI-related subjects, and Jessie Hall is one of two candidates for this position who are being brought to campus.

“It’s a job interview,” said Daniel Groll, the chair of philosophy at Carleton. He explained that a campus visit is a common stage in the hiring process for many departments at Carleton. The next candidate, Camila Flowerman, delivered the talk “Is Creating AI Art Plagiarism?” on Feb. 13.

“As someone who knows nothing about the professor hiring process, I guess I’m not really qualified to speak on it, but I think she would be a good candidate,” said Inigo Hare ’28, a student in Groll’s Ethics class. “She answered the students’ questions confidently and clearly, and she seemed really knowledgeable about what she was talking about.”

“I haven’t seen the talk from Thursday, so I cannot say good relative to the other person, but I do think she gave a wonderful lecture,” said Zhao. “Her specialization is very relevant today.”

As the study of AI becomes a larger focus for departments across campus and for companies and governments worldwide, the philosophy department is attempting to bring these questions to the forefront of the conversation.

Though large language models are a relatively recent technology, artificial intelligence is at the heart of many current policy discussions. As companies such as OpenAI and DeepSeek continue to offer more advanced systems, conversations around AI have become all the more important. Carleton’s philosophy department hopes to hire a postdoctoral scholar with experience looking at those questions.

“Our feeling in the philosophy department was that obviously AI has become this utterly possibly transformative thing in all of our lives, and it’s developing at this incredibly rapid pace,” said Groll, speaking on the reasoning for hiring someone with a background in AI.

“The questions that people are asking about it, like the ones that were asked today, ‘Can it think, can it understand, could it possibly be conscious, could it be the kind of thing that has moral status?’ but also ‘To what extent should we be outsourcing parts of our lives to it? What are the worries and dangers there? Could it be creative? Is it okay to use it as a tool for creativity?’, all of those are philosophical questions, and they are super important culturally,” Groll said.

Though the future of AI remains largely unknown, the department hopes that bringing in scholars like Hall will expand the conversation, both inside and outside of classrooms.

Groll explained, “I think worrying about it from a pedagogical point of view, like ‘What am I going to do in my classes? Should I use it? Not use it?’ is just an instance of the broader questions of ‘What role should this play in our lives? Where is it helpful? Where is it a hindrance?’”